How LogSeam Searches 500 Million Logs per Second

Ty the tiger collaborating with LogSeam on next-generation log analytics.

TL;DR

- LogSeam provides a security-focused data lakehouse and AI-driven anomaly detection, built to reduce time to response for critical incidents.

- LogSeam is designed for scale and is capable of searching over 500 million logs per second, delivering over 1.5 TB/s of throughput, all while cutting costs by 40–80%.

- LogSeam ingests JSON logs and reformats them into efficient Parquet files, reducing storage by up to 100× and optimizing them for sequential scans.

- Tigris enables LogSeam to increase throughput and distribute data globally, placing it close to where queries originate to minimize latency, all while cutting costs.

For incident responders, log search isn’t just a tool; it’s the clock ticking down on containment. Every second spent waiting on a query is another second of stress during an active incident. LogSeam is the security data lakehouse built to turn massive log volumes into sub-second answers. Its AI-driven features add another layer: autonomous agents that continuously scan logs for anomalies, and natural-language threat hunting that makes querying intuitive. Faster queries plus smarter detection net you faster response and fewer sleepless nights doomscrolling your status page.

LogSeam searches 500M+ logs/sec at more than 1.5 TB/s of throughput. Just drop files into a bucket, and the system handles the rest. No patchwork of 15 services. No worries about scale or throughput. Deploy anywhere: in LogSeam’s managed service or inside your own cloud.
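The two headline figures can be cross-checked with simple arithmetic. Taking them at face value (decimal terabytes, both figures measured on the same workload, which the post implies but does not state), they work out to roughly 3 KB scanned per log. Since both numbers are lower bounds ("500M+", "1.5+"), treat the result as an approximate average, not a fixed record size:

```latex
\frac{1.5 \times 10^{12}\ \text{B/s}}{5 \times 10^{8}\ \text{logs/s}} = 3 \times 10^{3}\ \text{B/log} \approx 3\ \text{KB per log}
```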

Faster Queries over Bigger Data

LogSeam builds a security data lakehouse for your logs, replacing the mass of ingestion and query tooling with a single, simplified system that does it all. This single pane of glass lets you see what’s going on, add natural-language filters for your apps, and trace your complicated systems as threats evolve. Every login attempt, API call, firewall hit, and user click is centralized in LogSeam so you can stay ahead of issues.

All your historical data is hot, ready, and easy to search because storage scales independently of compute (and cheaply, since it lives in object storage). If there’s a breach or insider threat, you can go back and trace it in detail instead of hoping your logs weren’t already deleted. It’s a game-changer for compliance, fraud detection, and AI-driven anomaly spotting. LogSeam provides per-tenant, independently scaling compute layers to power fast queries, backed by a globally distributed data lakehouse.

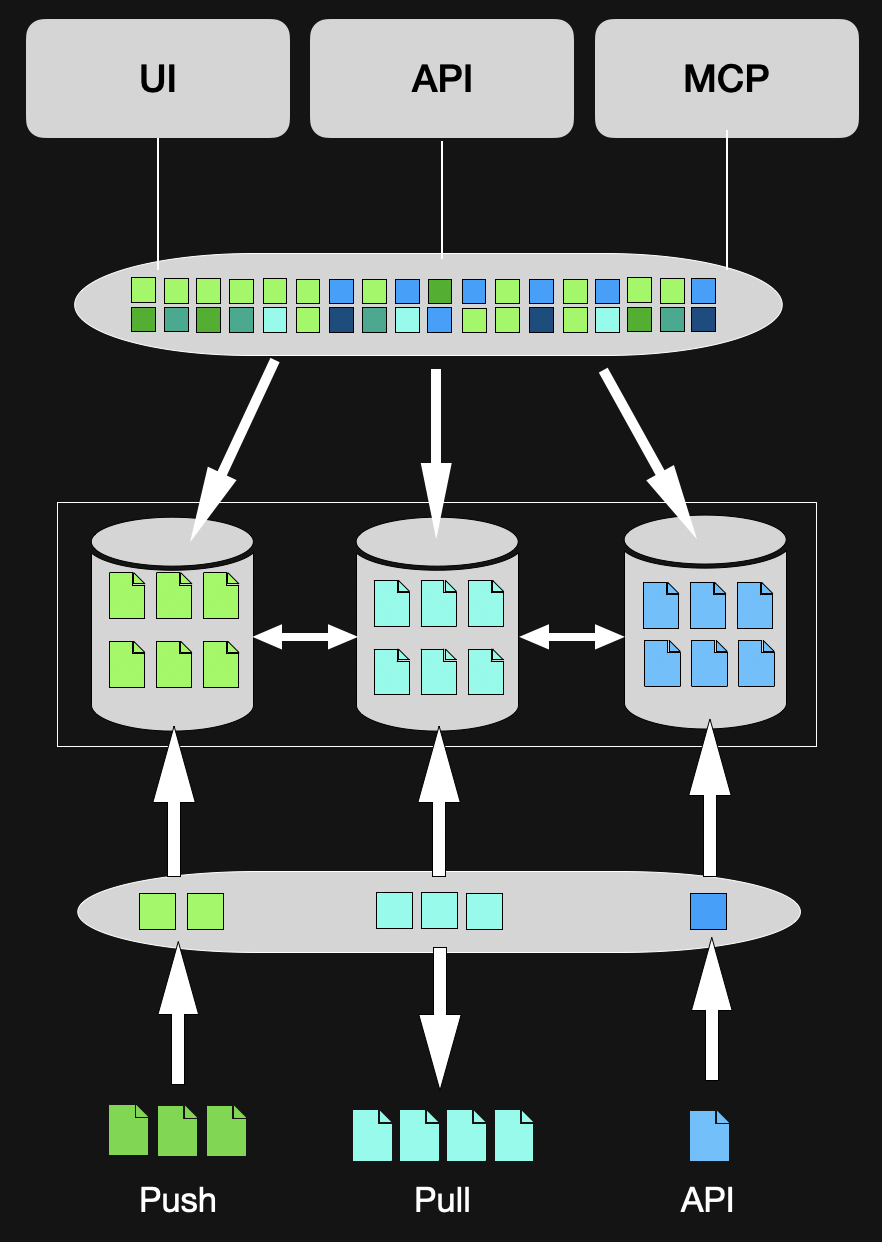

The Architecture

Log files are a classic example of the “lots of small files” problem in storage. Small files need special handling to avoid overloading metadata stores and to keep high request volume from dragging down throughput.

Log analysis tends to produce many small chunk files, and those small files are the Achilles’ heel of log file analysis. What’s novel is that we write logs into right-sized files, arranging and compressing them as we put them into Tigris. 50+ TB of logs per day is no problem, and you don’t need 15+ services to ingest them.
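To make the idea of writing logs into files of a chosen size concrete, here is a minimal Python sketch that buffers small log records until a target size is reached, then emits one batch under a time-ordered object key. The 128 MiB target, the `ts` field (an integer epoch timestamp), the `ChunkBuffer` class, and the `logs/<tenant>/<timestamp>-<seq>.parquet` key layout are all hypothetical; the post does not specify LogSeam’s chunk size or naming scheme.

```python
import json
from dataclasses import dataclass, field

# Hypothetical target size; the post does not say what size LogSeam aims for.
TARGET_CHUNK_BYTES = 128 * 1024 * 1024


@dataclass
class ChunkBuffer:
    """Accumulates small log records until a size threshold is reached (hypothetical)."""
    tenant: str
    target_bytes: int = TARGET_CHUNK_BYTES
    records: list = field(default_factory=list)
    size: int = 0
    seq: int = 0

    def add(self, record: dict):
        """Buffer one record; return (key, batch) once the chunk is full, else None."""
        line = json.dumps(record, separators=(",", ":"))
        self.records.append(record)
        self.size += len(line.encode("utf-8"))
        if self.size >= self.target_bytes:
            return self.flush()
        return None

    def flush(self):
        """Emit the buffered batch under a lexicographically time-ordered key."""
        if not self.records:
            return None
        first_ts = min(r["ts"] for r in self.records)
        # Zero-padding makes string order match numeric (time) order.
        key = f"logs/{self.tenant}/{first_ts:020d}-{self.seq:06d}.parquet"
        batch, self.records, self.size = self.records, [], 0
        self.seq += 1
        # The batch would then be converted to a columnar file and uploaded.
        return key, batch
```

Zero-padding the timestamp means lexicographic key order matches time order, so a time-range query maps to a contiguous range of keys. At the end of a stream, call `flush()` once more to emit any partial chunk.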

On ingestion, LogSeam converts your billions of JSON logs into efficient, columnar Parquet files and applies Zstandard compression, cutting log volume by up to 100×. Compression shrinks storage, and the columnar layout optimizes logs for sequential scans, so queries run faster and at lower cost. Logs are written into globally distributed storage, positioned close to where they’ll be queried. The result: terabytes of history searchable in seconds.
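The post names Parquet and Zstandard. To keep this sketch dependency-free, it swaps in a plain JSON-per-column layout and `zlib` from the Python standard library, so it illustrates the columnar idea rather than the actual file format or codec. With the `pyarrow` library, the real conversion would look roughly like `pyarrow.Table.from_pylist(records)` followed by `pyarrow.parquet.write_table(table, path, compression="zstd")`. The function names below are hypothetical.

```python
import json
import zlib


def to_columns(records):
    """Transpose row-oriented JSON records into one list per field (columnar layout)."""
    fields = sorted({k for r in records for k in r})
    # Missing fields become None, much as a columnar format stores nulls.
    return {f: [r.get(f) for r in records] for f in fields}


def compress_rows(records):
    """Row layout for comparison: one JSON line per record, compressed as a whole."""
    blob = "\n".join(json.dumps(r, sort_keys=True) for r in records).encode()
    return zlib.compress(blob, 9)


def compress_columns(columns):
    """Columnar layout: each column is serialized and compressed on its own."""
    return {f: zlib.compress(json.dumps(v).encode(), 9) for f, v in columns.items()}


def read_column(packed, name):
    """Decompress only the requested column; other columns are never touched."""
    return json.loads(zlib.decompress(packed[name]))
```

A query that filters on `status` only has to decompress that one column, and because each column holds values of a single kind (timestamps, status codes, paths), similar bytes sit next to each other, which generally helps the compressor.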

When it’s time to search, LogSeam spins up elastic compute dedicated to each query. Dozens or hundreds of nodes can each sustain multi-GB/s reads off the backing object storage. Once the job is done, compute shuts down so cost maps directly to usage. Analysts and AI agents never contend for resources, which means consistent, predictable performance even during peak investigations.
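The per-query fan-out can be sketched as follows. Threads stand in for compute nodes and an in-memory dict stands in for the object store; `partition`, `scan_node`, and `run_query` are hypothetical names, and round-robin assignment is just one simple way to spread objects across nodes, not necessarily how LogSeam schedules work.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(keys, n_nodes):
    """Round-robin object keys across n_nodes so each node gets a similar share."""
    return [keys[i::n_nodes] for i in range(n_nodes)]


def scan_node(store, keys, predicate):
    """One worker: read each assigned object and count matching records."""
    return sum(1 for k in keys for rec in store[k] if predicate(rec))


def run_query(store, predicate, n_nodes):
    """Fan a query out over n_nodes workers, then merge their partial counts."""
    shards = partition(sorted(store), n_nodes)
    # The pool exists only for this query; leaving the `with` block shuts it down,
    # mirroring compute that is released as soon as the job finishes.
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = pool.map(lambda ks: scan_node(store, ks, predicate), shards)
        return sum(partials)
```

Because each query gets its own pool, two concurrent queries never share workers, which is the property the paragraph above describes for analysts and AI agents.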

Files are organized for sequential access and stored in Tigris, which keeps small objects inline and keys ordered so sequential reads map to contiguous storage blocks. The result is that query engines can coalesce millions of small reads into large, efficient network operations. This is exactly what LogSeam’s compute layer needs to sustain multi-GB/s per node.
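The post does not describe exactly how the query engines coalesce reads. A common approach, assumed here, is to sort the requested byte ranges and merge any whose gap is at most a threshold, so one larger ranged read replaces many small ones. `coalesce_ranges`, `slice_results`, and the `max_gap` parameter are hypothetical, and the sketch does not call any Tigris API.

```python
def coalesce_ranges(ranges, max_gap):
    """Merge byte ranges (start, end_exclusive) whose gap is <= max_gap.

    Returns a list of (start, end, members), where members are the original
    ranges served by that single larger read.
    """
    merged = []
    for start, end in sorted(ranges):
        # Overlapping ranges have a negative gap, so they always merge.
        if merged and start - merged[-1][1] <= max_gap:
            m_start, m_end, members = merged[-1]
            merged[-1] = (m_start, max(m_end, end), members + [(start, end)])
        else:
            merged.append((start, end, [(start, end)]))
    return merged


def slice_results(blob, base, members):
    """Cut each original range back out of one coalesced read that began at `base`."""
    return {(s, e): blob[s - base:e - base] for s, e in members}
```

The trade-off is that bytes inside the gaps are read and discarded; a larger `max_gap` wastes some bandwidth but saves per-request overhead, which usually dominates when objects are small and requests are numerous.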

Global Storage That’s Actually Global

Threats don’t respect geography, and neither should log search. When your compute jobs are global, your logs are global too. LogSeam’s global replication ensures an analyst in Sydney gets the same sub-second results as a peer in New York, without cross-region delays or hidden costs.

When LogSeam needed a global object storage solution, we looked far and wide. One day we found Tigris Data, and what happened next was nothing short of amazing: a globally performant object store that could scale with us instead of working against us. We pushed over 1.5 TB/s (that’s a big B) of search using 20 commodity compute engines, searching over 500 million logs per second. And all of that with global replication and data protection.

Oh, and they’re just great to work with.

Conclusion: Search, Unlimited

The result is a log search architecture that does not require trade-offs between cost, speed, and scale. It eliminates the tangle of services traditionally needed to make object storage viable for analytics, replacing it with a streamlined path from ingestion to query.

For teams operating at scale, whether supporting incident response investigations across continents or enabling AI-driven log analysis, LogSeam’s design provides the performance headroom to keep up with the data you already have and the flexibility to handle what comes next.

Check out what makes LogSeam so fast

LogSeam proves what Tigris can do. Now put Tigris behind your own stack and never worry about scaling or performance again.