Autumn trees on a dusty road in Magoebaskloof, South Africa. Photo by

Garren Smith, iPhone 13 Pro.

Tigris now supports object notifications! Object notifications are how you

receive events every time something changes in a bucket. Think of it as your

bucket's way of saying "Hey, something happened! Come check it out!", much like

the inotify subsystem in Linux. These

notifications can be helpful for keeping track of what's going on in your

application.

Use Case: Automatic Image Processing

Imagine you're building a photo-sharing app. Every time a user uploads a new

picture, you want to automatically generate a thumbnail and maybe even run it

through an AI to detect any inappropriate content. With object notifications,

this becomes a breeze!

- User uploads an image to your Tigris bucket.

- Tigris sends a notification to your webhook.

- Your server receives the notification and springs into action.

- It downloads the new image, creates a thumbnail, and runs it through an AI check.

- The processed image and its metadata are saved back to Tigris.

All of this happens automatically, triggered by that initial upload.
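
The receiving side of that flow can be sketched in a few lines of Python. This is only an illustration of the filtering step your webhook might do before any image work starts. The payload field names (`events`, `eventName`, `object.key`), the `OBJECT_CREATED` prefix, and the `thumbnails/` output prefix are all assumptions made for this sketch, so check them against the payload your webhook actually receives.

```python
import json

# Hypothetical: file extensions this app treats as images.
IMAGE_SUFFIXES = (".jpg", ".jpeg", ".png", ".webp")


def keys_to_process(body: bytes) -> list[str]:
    """Return the keys of newly created images named in a notification body."""
    payload = json.loads(body)
    keys = []
    for event in payload.get("events", []):
        # Assumed field names and event naming; verify against real payloads.
        if not event.get("eventName", "").startswith("OBJECT_CREATED"):
            continue
        key = event.get("object", {}).get("key", "")
        # Skip our own output so saving a thumbnail does not trigger another run.
        if key.startswith("thumbnails/"):
            continue
        if key.lower().endswith(IMAGE_SUFFIXES):
            keys.append(key)
    return keys
```

Your HTTP handler would pass the raw request body to `keys_to_process` and then download, resize, and check each returned key. Skipping the output prefix matters: without it, every saved thumbnail would produce a new notification and the loop would never end.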

Behind the Scenes: Building Object Notifications

Now, let's pull back the curtain and see how we built this feature and a few

tricky situations we had to handle. Grab your hard hat, because we're going on a

little tour of Tigris's inner workings!

Tigris isn't just any object store – it's a global object store. This means that

objects can be changed in multiple regions around the world. This makes them

available in multiple regions, always ready when you need them. But it also means we

need a way of keeping track of all the changes for the same object. This is

where replication comes in.

Replication: Keeping Everyone in the Loop

To make sure everything stays in sync, we replicate changes to multiple regions.

This ensures high availability and improved redundancy of our objects.

The caveat to this is that replication is a background task, and the speed at

which an object is replicated from one region to another can be affected by many

external factors.

As a result, changes to the same object can arrive at a region out of order.
To handle this, when a region receives a change, it looks at the Last Modified
timestamp in the metadata to determine whether the change is new and needs to
be applied, or whether the region has already seen a newer change. If the
change is old, the region discards it.
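
Here is a minimal sketch of that "newest Last Modified wins" check. It is not our actual replication code: `Change`, `RegionStore`, and the in-memory dictionary are hypothetical stand-ins, and treating an equal timestamp as "already seen" is an assumption made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Change:
    key: str
    last_modified: float  # seconds since epoch, taken from the object metadata
    data: bytes


class RegionStore:
    """Hypothetical in-memory stand-in for one region's view of a bucket."""

    def __init__(self) -> None:
        self._objects: dict[str, Change] = {}

    def apply(self, change: Change) -> bool:
        """Apply a replicated change; return False if it was discarded as old."""
        current = self._objects.get(change.key)
        # Assumption: an equal timestamp counts as already seen.
        if current is not None and current.last_modified >= change.last_modified:
            return False
        self._objects[change.key] = change
        return True

    def get(self, key: str) -> Optional[Change]:
        return self._objects.get(key)
```

With this rule, a region ends up with the same final state no matter what order the replicated changes arrive in.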

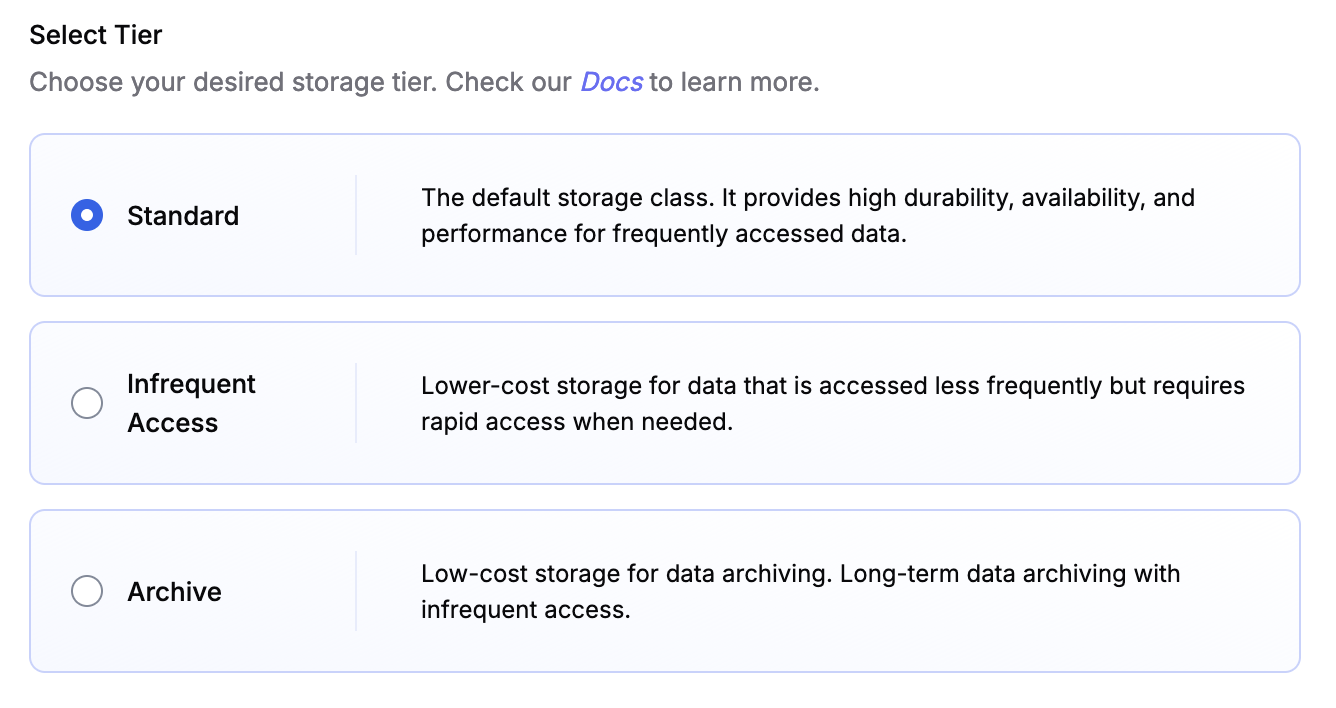


The Object Notification Hub

When object notifications are enabled for a bucket, we assign one region to be

the object notification hub for that bucket. This region gets the important job

of keeping track of all the changes. We create a special index, very similar to
a secondary index, in that region's FoundationDB. Changes in the index are
ordered first by the FoundationDB Versionstamp assigned when the change is added
to the index, and then by the Last Modified timestamp of the object metadata.

The Versionstamp helps the worker keep track of which events it has seen and

processed.
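
To make the ordering concrete, here is a small in-memory sketch of such an index. It does not use FoundationDB at all: a plain counter stands in for the Versionstamp, and `ChangeIndex`, `add`, and `read_after` are hypothetical names chosen for this example.

```python
import bisect
import itertools


class ChangeIndex:
    """In-memory stand-in for an index ordered by (stamp, last_modified, key)."""

    def __init__(self) -> None:
        # Assumption: a monotonically increasing counter plays the Versionstamp role.
        self._counter = itertools.count(1)
        self._entries: list[tuple[int, float, str]] = []

    def add(self, key: str, last_modified: float) -> int:
        stamp = next(self._counter)
        # Stamps only ever increase, so appending keeps the list sorted.
        self._entries.append((stamp, last_modified, key))
        return stamp

    def read_after(self, cursor: int, limit: int = 100) -> list[tuple[int, float, str]]:
        """Return up to `limit` entries whose stamp is greater than `cursor`."""
        start = bisect.bisect_right(self._entries, (cursor, float("inf"), ""))
        return self._entries[start:start + limit]
```

Because a reader only has to remember the last stamp it handled, it can pick up exactly where it left off with `read_after(cursor)`, regardless of the Last Modified values on the entries.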

Why one region, you may ask? If we didn't do this, we would end up with multiple
regions sending the same events to the webhook (hello, friendly DDoS attack), or
we would have to build a complex system to coordinate the regions so they
don't send duplicate events.

The Background Task: Our Diligent Messenger

In our object notification region, we have a background task running. Think of

it as a tireless worker that's always on the lookout for changes. Every so

often, it checks the special index we mentioned earlier, collects all the latest

changes, and sends them off to the webhook.

The worker will also keep track of the last processed change and will retry a

few times if the request fails. Finally, it will remove old changes from the

index that have already been processed.
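
One pass of such a worker could look roughly like the sketch below. It is an illustration under stated assumptions, not our production code: the index is a plain list sorted by stamp, `send` is a hypothetical callable that returns `True` when the webhook accepted the batch, and the retry count and backoff are made-up defaults.

```python
import time
from typing import Callable

Entry = tuple[int, str]  # (stamp, event description), sorted by stamp


def run_once(
    index: list[Entry],
    cursor: int,
    send: Callable[[list[Entry]], bool],
    max_attempts: int = 3,
    backoff_seconds: float = 0.0,
) -> int:
    """Send everything after `cursor`; return the new cursor position."""
    batch = [entry for entry in index if entry[0] > cursor]
    if not batch:
        return cursor
    for attempt in range(max_attempts):
        if send(batch):
            cursor = batch[-1][0]
            # Remove entries that have now been processed.
            index[:] = [entry for entry in index if entry[0] > cursor]
            return cursor
        # Back off a little longer after each failed attempt.
        time.sleep(backoff_seconds * (2 ** attempt))
    # Every attempt failed: keep the entries and the cursor for the next pass.
    return cursor
```

Calling `run_once` on a timer and storing the returned cursor gives the behavior described above: failed deliveries are retried a few times, and only delivered changes are trimmed from the index.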

Why We Can't Guarantee Ordered Events

We talked about how object changes replicated from many regions can take
different amounts of time to arrive. The problem arises when the worker is ready to send the latest

events for an object. It has no way of knowing if all changes for an object have

been replicated to its region. It could in theory contact every region and
check, but this would be prohibitively expensive and would still not be a
complete guarantee.

This forces us to accept the trade-off of sending events out of order. The worker

will read the latest list of changes that have been replicated to the region and

send them to the webhook.
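
If your application cares about the newest state of an object, one way to cope with out-of-order delivery is to remember the latest timestamp you have handled per key and skip anything older. The sketch below is one possible approach on the receiving side, not something Tigris does for you. `LatestEventFilter` is a hypothetical helper, and it assumes each event carries an ISO 8601 timestamp with an explicit UTC offset.

```python
from datetime import datetime


class LatestEventFilter:
    """Remember the newest event time seen per key and reject older events."""

    def __init__(self) -> None:
        self._latest: dict[str, datetime] = {}

    def is_fresh(self, key: str, event_time: str) -> bool:
        # Assumption: timestamps look like "2024-01-01T12:00:00+00:00".
        when = datetime.fromisoformat(event_time)
        seen = self._latest.get(key)
        if seen is not None and when <= seen:
            return False  # an equal or newer event was already handled
        self._latest[key] = when
        return True
```

A handler would call `is_fresh(key, event_time)` first and only do the expensive work when it returns `True`. In a real service the per-key state would need to live somewhere durable rather than in process memory.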

Wrapping Up

That's how we built object notifications in Tigris. We took a global system,

added some global replication, threw in a change index, and topped it off with a

hardworking background task.

The result? A system that keeps you in the loop about what's happening in your

buckets, no matter where in the world those changes occur. Whether you're

building the next big photo-sharing app or just want to keep tabs on your

storage, object notifications have got your back!

We hope this peek behind the scenes was fun and informative. Happy coding!
